Chip designers have long faced a tedious, manual process when debugging non-equivalent points in a netlist, that is, the discrepancies between a reference design and an implemented one. Even with tools like GOF Debug, which offers counter-example tracing on schematics, the designer must still be an active participant: reading logic values by hand and clicking through the design to trace the issue back to its source.

AI agent-driven debug automation changes this. Using Large Language Models (LLMs), the GOF platform can turn this manual, graphical process into a fully automated one, reducing debugging time and effort.

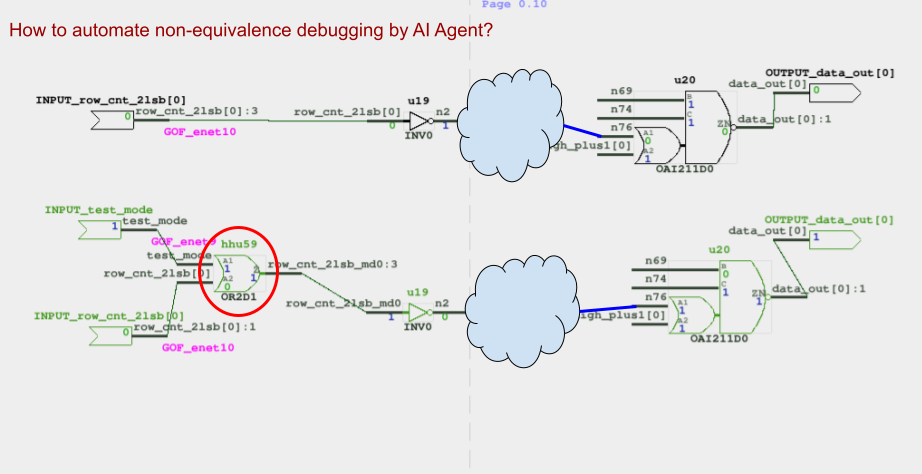

Figure 1: How can schematic non-equivalence debugging be automated?

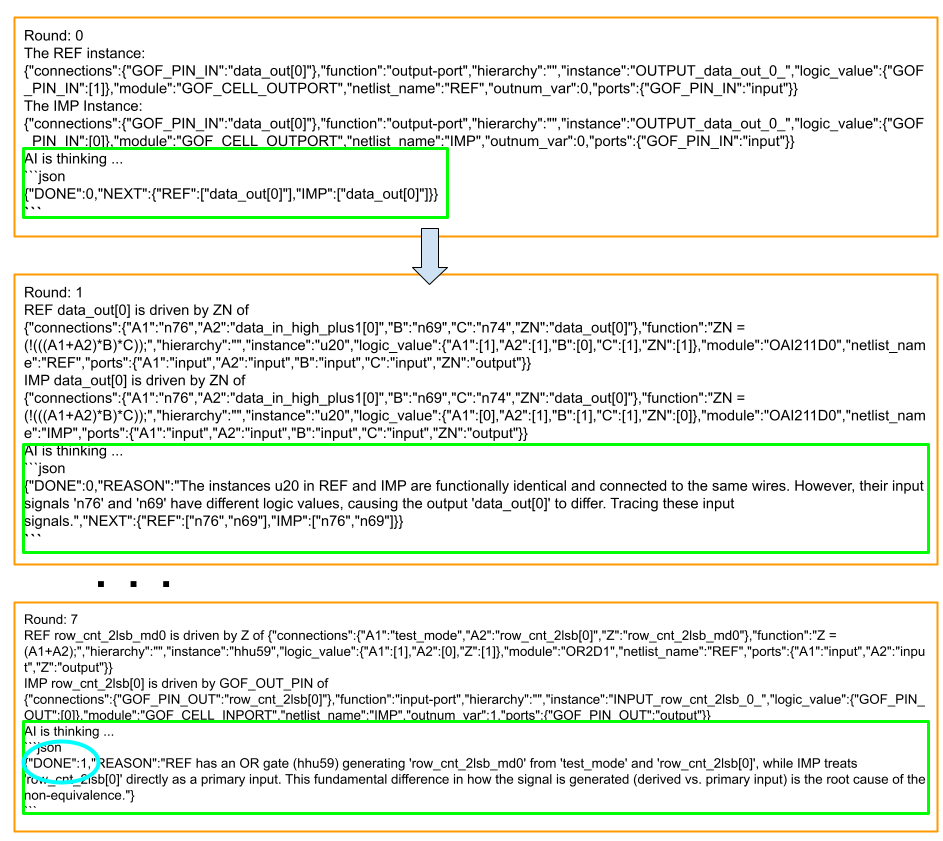

An AI agent, powered by a Large Language Model (LLM), automates the debugging process. Instead of a human, the AI agent takes charge.

Figure 2: AI Agent automates the debugging
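To make the trace-back step concrete, here is a minimal Python sketch of the kind of walk the agent takes over from the designer. It is not GOF's implementation or API: the netlist format and the `simulate` and `trace_mismatch` helpers are hypothetical. It also assumes purely combinational logic and that internal nets carry the same names in both netlists, which real designs often do not.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy format: net name -> (gate function, fan-in net names).
Gate = Tuple[Callable[..., int], List[str]]
Netlist = Dict[str, Gate]


def and2(a: int, b: int) -> int:
    return a & b


def or2(a: int, b: int) -> int:
    return a | b


def inv(a: int) -> int:
    return 1 - a


def simulate(netlist: Netlist, inputs: Dict[str, int]) -> Dict[str, int]:
    """Evaluate every net for one input vector (combinational logic only)."""
    values = dict(inputs)

    def value_of(net: str) -> int:
        if net not in values:
            func, fanin = netlist[net]
            values[net] = func(*(value_of(n) for n in fanin))
        return values[net]

    for net in netlist:
        value_of(net)
    return values


def trace_mismatch(ref: Netlist, imp: Netlist,
                   inputs: Dict[str, int], output: str) -> List[str]:
    """Walk back from a failing output along fan-in nets whose values differ."""
    ref_vals = simulate(ref, inputs)
    imp_vals = simulate(imp, inputs)
    if ref_vals[output] != imp_vals[output]:
        path = [output]
    else:
        return []  # The counter-example does not fail on this output.
    net = output
    while net in imp:
        _, fanin = imp[net]
        bad = [n for n in fanin if n in ref_vals and ref_vals[n] != imp_vals[n]]
        if not bad:
            break  # All inputs agree, so this gate is where the mismatch starts.
        net = bad[0]
        path.append(net)
    return path


# Toy reference and implementation; the implementation has OR where AND is expected.
REF: Netlist = {"n1": (and2, ["a", "b"]), "n2": (inv, ["c"]),
                "data_out[0]": (or2, ["n1", "n2"])}
IMP: Netlist = {"n1": (or2, ["a", "b"]), "n2": (inv, ["c"]),
                "data_out[0]": (or2, ["n1", "n2"])}

counter_example = {"a": 1, "b": 0, "c": 1}
path = trace_mismatch(REF, IMP, counter_example, "data_out[0]")
print(path)
```

The last net in the returned path is the first gate whose inputs match the reference while its output does not, which is the point a designer would otherwise reach by clicking backward through the schematic.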

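Example script for AI agent non-equivalence debugging:

```
# Load the cell library
read_library("tsmc.lib");
# Read the reference and implementation netlists
read_design('-ref', "ref_netlist.v");
read_design('-imp', "imp_netlist.v");
# Configure the remote AI server
set_ai_remote_server("localhost", 1998, 0, "___ENDQQ___", "___ENDAA___", "LLM_1_0_0");
# Debug the non-equivalent point data_out[0]
ai_debug_noneq("data_out[0]");
```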

This AI agent-driven approach is not limited to netlist non-equivalence issues. The same methodology can be applied to gate-level netlist simulation debugging. Given simulation waveforms and netlist data, the agent can analyze discrepancies between expected and simulated values, then navigate the design to pinpoint the source of a functional error. This adaptability suggests that AI agents could take on a wide range of debugging and verification tasks across the chip design workflow.
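As a rough illustration of the first step in such a simulation flow, the Python sketch below finds the earliest cycle at which a simulated waveform departs from the expected one. The waveform format (one sampled value per clock cycle) and the `first_mismatch` helper are assumptions made for this example and are not part of GOF.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical waveform format: signal name -> one sampled value per clock cycle.
Waveform = Dict[str, List[int]]


def first_mismatch(expected: Waveform,
                   simulated: Waveform) -> Optional[Tuple[int, str]]:
    """Return (cycle, signal) of the earliest disagreement, or None if all match."""
    earliest: Optional[Tuple[int, str]] = None
    for signal, exp_samples in expected.items():
        sim_samples = simulated.get(signal, [])
        for cycle, (exp, sim) in enumerate(zip(exp_samples, sim_samples)):
            if exp != sim:
                # Keep only the earliest failing cycle across all signals.
                if earliest is None or cycle < earliest[0]:
                    earliest = (cycle, signal)
                break
    return earliest


expected = {"data_out[0]": [0, 1, 1, 0], "valid": [0, 0, 1, 1]}
simulated = {"data_out[0]": [0, 1, 0, 0], "valid": [0, 0, 1, 1]}

mismatch = first_mismatch(expected, simulated)
print(mismatch)
```

The resulting cycle and signal give a starting point from which a trace through the netlist can begin.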